vmk0 ping loss with vSphere

We increasingly run into vmk0 ping loss on hosts connected to Cisco Nexus and Meraki switches. Nearly 25% of the network traffic is lost. After investigating from every angle, we found the cause, and I hope this blog post saves you some time.

Issue

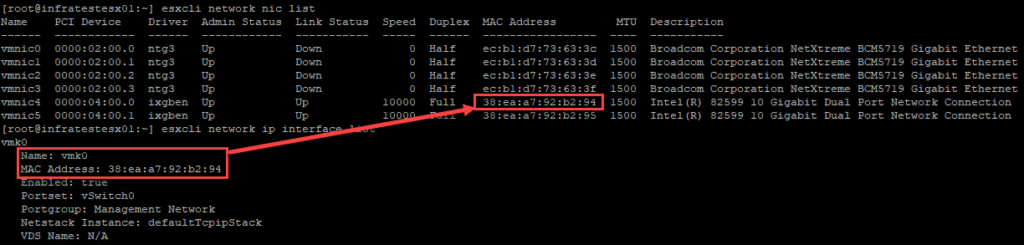

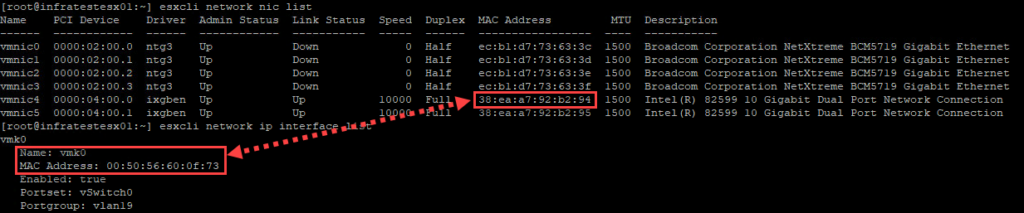

By default, the vmk0 VMkernel interface takes the MAC address of the first connected physical interface at host installation. Here we can see that vmnic4 has the same MAC address as vmk0.

With vSphere 8, we see this shared MAC address causing far more issues with the Cisco network stack than in earlier versions.
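To check whether a host is affected without comparing screenshots, the MAC address of vmk0 can be compared against the physical NIC MACs from the shell. The following is only a minimal sketch under a few assumptions: the helper names `extract_macs` and `mac_is_shared` are made up for this post, and the live part assumes that `esxcli network ip interface list` prints the `MAC Address:` line directly after `Name: vmk0`, which may differ between ESXi builds.

```shell
#!/bin/sh
# Hypothetical helpers for illustration; they are not part of ESXi.

# Print every MAC address found on stdin, lowercased, one per line.
extract_macs() {
    grep -oiE '([0-9a-f]{2}:){5}[0-9a-f]{2}' | tr 'A-F' 'a-f'
}

# Exit 0 if the MAC given as $1 also appears in the text on stdin.
mac_is_shared() {
    target=$(printf '%s\n' "$1" | tr 'A-F' 'a-f')
    extract_macs | grep -qxF "$target"
}

# Live check, only attempted where esxcli exists (that is, on the ESXi host).
if command -v esxcli >/dev/null 2>&1; then
    # Assumption: the "MAC Address:" line directly follows "Name: vmk0".
    vmk_mac=$(esxcli network ip interface list | grep -A1 'Name: vmk0' | extract_macs)
    if [ -z "$vmk_mac" ]; then
        echo "could not determine the MAC address of vmk0"
    elif esxcli network nic list | mac_is_shared "$vmk_mac"; then
        echo "vmk0 ($vmk_mac) shares its MAC address with a physical NIC"
    else
        echo "vmk0 ($vmk_mac) does not share its MAC address with a physical NIC"
    fi
fi
```

The MAC addresses are matched by pattern rather than by column position, so the check should not depend on the exact table layout of `esxcli network nic list`.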

Resolution

The resolution is quite simple: remove vmk0 after host setup and recreate it manually through the CLI. Put the host into maintenance mode, document your vmk0 settings, and then start the recreation process through the host's hardware management interface (here, HPE iLO), because removing vmk0 typically cuts the management network connection to the host.
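For the "document your settings" step, one option is to dump the relevant `esxcli` output into a text file before touching anything. This is a rough sketch, not a complete backup: `backup_vmk_settings` and the `ESXCLI` override variable are hypothetical names introduced here, and the default output path under `/tmp` is just an assumption. The service tags are included because it is worth verifying after the recreation that vmk0 still carries the ones it had before.

```shell
#!/bin/sh
# Hypothetical helper: save the current settings of a VMkernel interface
# to a text file before the interface is removed.
# Set ESXCLI=echo for a dry run on a machine without esxcli.
backup_vmk_settings() {
    iface=${1:-vmk0}
    out=${2:-/tmp/${iface}-settings.txt}
    cli=${ESXCLI:-esxcli}
    {
        echo "# interface list"
        "$cli" network ip interface list
        echo "# IPv4 settings of $iface"
        "$cli" network ip interface ipv4 get --interface-name="$iface"
        echo "# service tags of $iface"
        "$cli" network ip interface tag get --interface-name="$iface"
        echo "# IPv4 routes"
        "$cli" network ip route ipv4 list
    } > "$out"
    # Print the path of the file that was written.
    echo "$out"
}

# Usage on the host (commented out so the sketch has no side effects):
# backup_vmk_settings vmk0
```

Keep a copy of the values somewhere outside the host as well, since they have to be typed in again at the console once vmk0 is gone.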

First we need to remove the VMkernel interface. If a dvSwitch is in use, we need to find the port ID that vmk0 currently uses before we remove it:

esxcfg-vswitch -l | grep vmk0 | awk '{print $1}'

Now we can remove the VMkernel interface:

esxcli network ip interface remove --interface-name=vmk0

Then recreate it and attach it to the dvSwitch or vSwitch. For a dvSwitch we use the syntax:

esxcli network ip interface add --interface-name=vmk0 --dvs-name=DVSWITCHNAME --dvport-id=PORT_ID_FROM_STEP_TWO

For a vSwitch we use:

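esxcli network ip interface add --interface-name=vmk0 --portgroup-name=PORT_GROUP_NAME

esxcli network ip interface ipv4 set --interface-name=vmk0 --ipv4=IP --netmask=NETMASK --type=static

The second command sets the static IPv4 address and is needed in the dvSwitch case as well. Then add the default gateway for the newly created VMkernel interface: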

esxcfg-route -a default IP_OF_GW

Now we check the MAC address of vmk0 again with:

esxcli network ip interface list

We can see that vmk0 now has a virtual MAC address, and this solves the problem! To compare it against the MAC addresses of the physical NICs, run:

esxcli network nic list

Conclusion

Finally, we rerun the ping job with 100 requests. You can see that we no longer have those ping losses. Success!
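If you want a number instead of reading the ping output by eye, the loss percentage can be pulled out of the summary line. This is a small sketch, assuming the usual summary format ending in `N% packet loss`; `ping_loss_percent` is a hypothetical helper name and `ESXI_HOST_IP` is a placeholder for your host's management address.

```shell
#!/bin/sh
# Hypothetical helper: print the packet-loss percentage from ping output
# read on stdin, based on the summary line ending in "N% packet loss".
ping_loss_percent() {
    sed -n 's/.* \([0-9.]*\)% packet loss.*/\1/p'
}

# Usage from a workstation (commented out so the sketch sends no traffic):
# ping -c 100 ESXI_HOST_IP | ping_loss_percent
#
# Usage on the ESXi host itself, pinging the gateway through vmk0:
# vmkping -I vmk0 -c 100 IP_OF_GW | ping_loss_percent
```

Running this before and after the vmk0 recreation gives a simple before/after comparison for the same 100 requests.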
